Join our comprehensive webinar on "Building an Optimal LLM Evaluation Stack" to learn how to create a robust framework for evaluating and optimizing large language models. Discover best practices, tools, and strategies to ensure your LLMs deliver high-quality, reliable, and efficient results.

This session is designed for technical professionals who want to implement end-to-end evaluation systems for their LLM applications, covering everything from initial setup to advanced optimization techniques.

Key Learning Objectives

- Understand the core components of an effective LLM evaluation stack.

- Learn about different evaluation metrics and when to use them.

- Discover tools and frameworks for automated evaluation.

- Implement continuous evaluation practices in production environments.

- Optimize evaluation costs while maintaining high quality.

- Build scalable evaluation pipelines that grow with your application.

Who Should Attend

- ML Engineers implementing LLM evaluation systems.

- Data Scientists working with language models.

- Technical Leads planning AI evaluation strategies.

- DevOps Engineers managing ML pipelines.

- Anyone interested in LLM quality assurance.

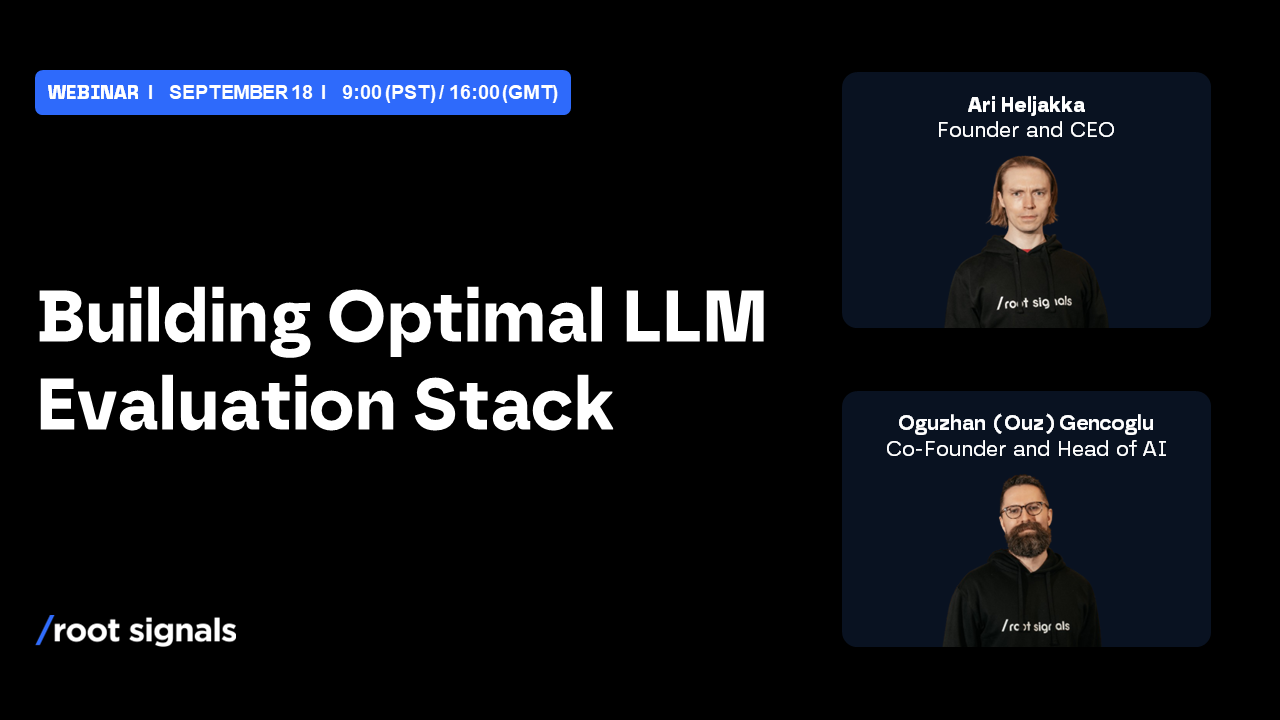

About the Presenter

This webinar is presented by Scorable's team of experts, who have extensive experience building and deploying LLM evaluation systems. The insights shared are based on real-world implementations and best practices from various industries.