Will Agent Evaluation via MCP Stabilize Agent Frameworks?

Discover how exposing complex AI Evaluation frameworks to agents via MCP (Model Context Protocol) allows for a new paradigm of controllable self-improvement.

Watch recording →

Learn from experts, watch demos, and stay updated on the latest in AI quality and safety.

Explore the dual themes of agent evaluations and EvalOps in this comprehensive technical session on cost-effective LLM judges.

Watch recording →

A comprehensive look at agent evaluation frameworks and methodologies, delivered at the AI Engineer Summit: Agents at Work!

Watch recording →

Dive deep into the intricacies of LLM-based applications and learn to detect, block, and remedy the most common yet critical errors that undermine reliability.

Watch recording →

Debunking common myths and misconceptions about implementing LLM judges in production environments, drawing on real-world experience.

Watch recording →

A comprehensive keynote on operational excellence in LLM evaluation and judgment systems.

Watch recording →

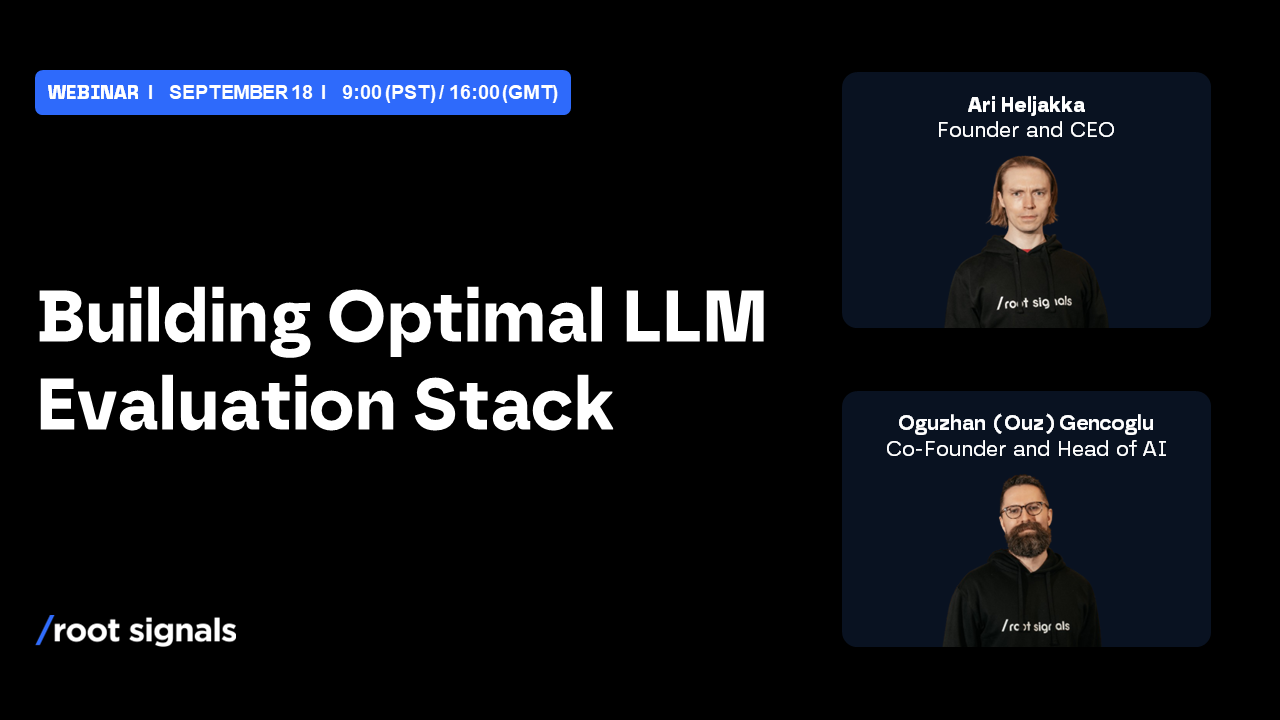

Learn how to create a robust framework for evaluating and optimizing large language models, covering best practices, tools, and strategies for production reliability.

Watch recording →