We believe the answer lies in personalized evaluation. That's why we built Scorable, an AI evaluation tool that helps teams create custom evaluation stacks for their Large Language Model (LLM) applications. We believe only AI can reliably evaluate AI.

In this article, we'll explain how Scorable works and why it's essential for anyone building AI-powered products.

Why Is AI Evaluation Important?

Even the best AI models are prone to hallucinations, inconsistencies, and unreliable outputs. For example:

- A tourism chatbot might share incorrect historical information.

- A health assistant might use too much jargon for patients.

- An AI interview coach might fail to provide fair or engaging feedback.

Without evaluation, you can't measure these problems, let alone fix them. Traditional benchmarks are too general to capture them, because each use case has its own requirements. That's where Scorable comes in.

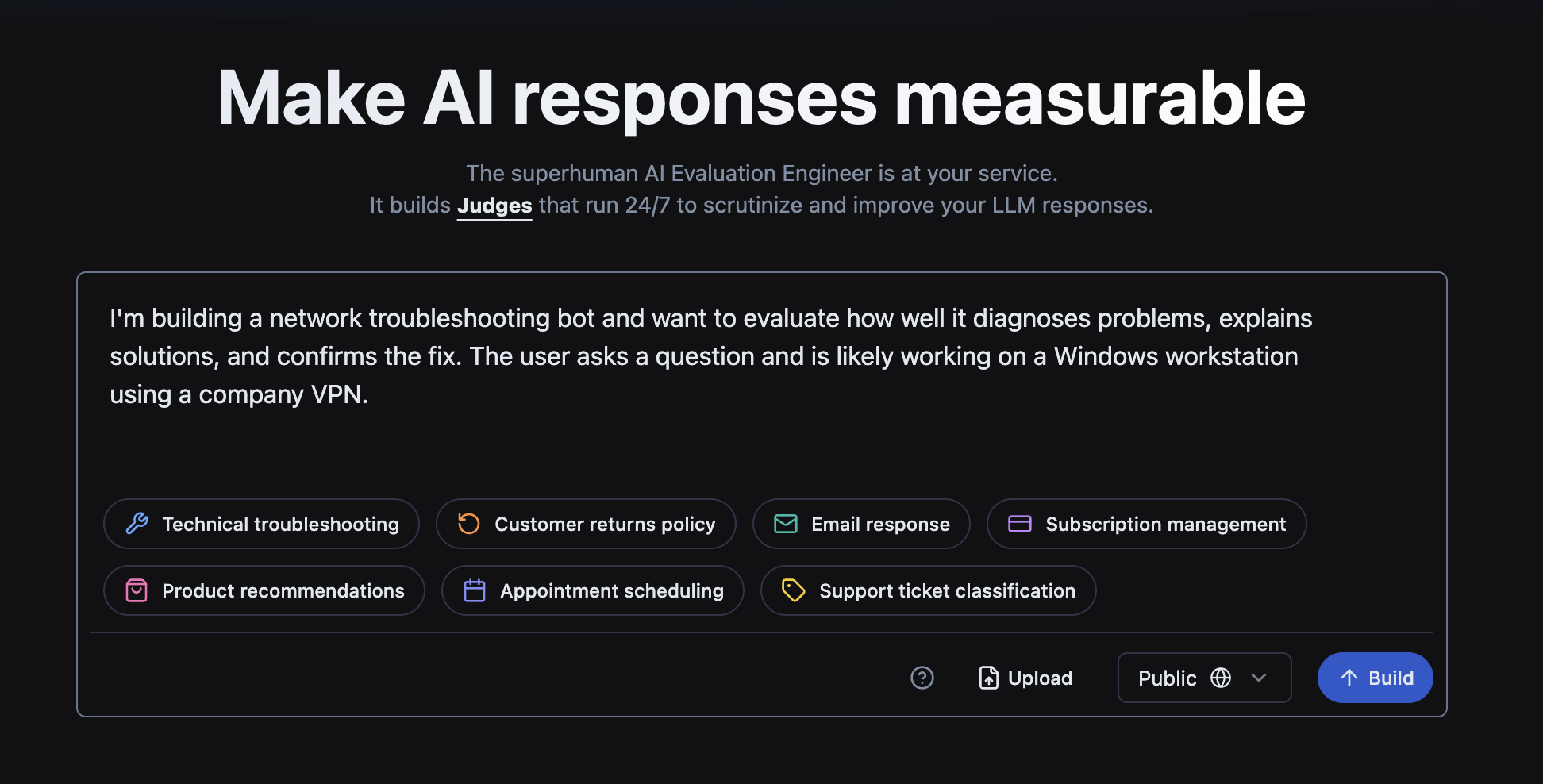

What Is Scorable?

Scorable builds your evaluation stack for you in minutes, not months. With smart defaults and ready-made evaluators, you can start measuring what matters right away and tweak things only when you want to.

Some example metrics you can include:

- Accuracy: How correct are the answers?

- Usefulness: Do users find the responses helpful?

- Factual correctness: Are the outputs based on true information?

- Expression quality: Is the tone engaging and aligned with your brand?
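To make the idea of an evaluation stack more concrete, here is a minimal sketch in plain Python. It is not Scorable's API: the `Evaluator` class, the thresholds, and the scoring functions are hypothetical. The keyword and length heuristics are toy stand-ins for what would normally be LLM judges, but the structure shows the idea: a stack is a set of named metrics, each scoring an answer and flagging it when it falls below an acceptable level.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical type: an evaluator maps (question, answer) to a score in [0, 1].
ScoreFn = Callable[[str, str], float]


@dataclass
class Evaluator:
    name: str
    score: ScoreFn
    threshold: float  # minimum acceptable score for this metric


def usefulness(question: str, answer: str) -> float:
    # Toy heuristic standing in for an LLM judge: very short answers score low.
    return min(len(answer.split()) / 20, 1.0)


def expression_quality(question: str, answer: str) -> float:
    # Toy heuristic: penalize words from a hypothetical jargon list.
    jargon = {"synergy", "leverage", "paradigm"}
    words = [w.strip(".,!?").lower() for w in answer.split()]
    if not words:
        return 0.0
    return 1.0 - sum(w in jargon for w in words) / len(words)


def run_stack(stack: List[Evaluator], question: str, answer: str) -> Dict[str, dict]:
    """Score one answer with every evaluator and flag metrics below threshold."""
    results = {}
    for ev in stack:
        s = ev.score(question, answer)
        results[ev.name] = {"score": round(s, 2), "passed": s >= ev.threshold}
    return results


stack = [
    Evaluator("usefulness", usefulness, threshold=0.5),
    Evaluator("expression_quality", expression_quality, threshold=0.9),
]

results = run_stack(
    stack,
    "How should I prepare for a behavioral interview?",
    "Use the STAR method to structure each story.",
)
print(results)
# {'usefulness': {'score': 0.4, 'passed': False}, 'expression_quality': {'score': 1.0, 'passed': True}}
```

Keeping a per-metric threshold means one weak dimension, here a too-brief answer, is flagged even when the others pass.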

From Draft to Personalized Evaluation

In our demo, we showed Scorable working with an AI-powered job interview coach.

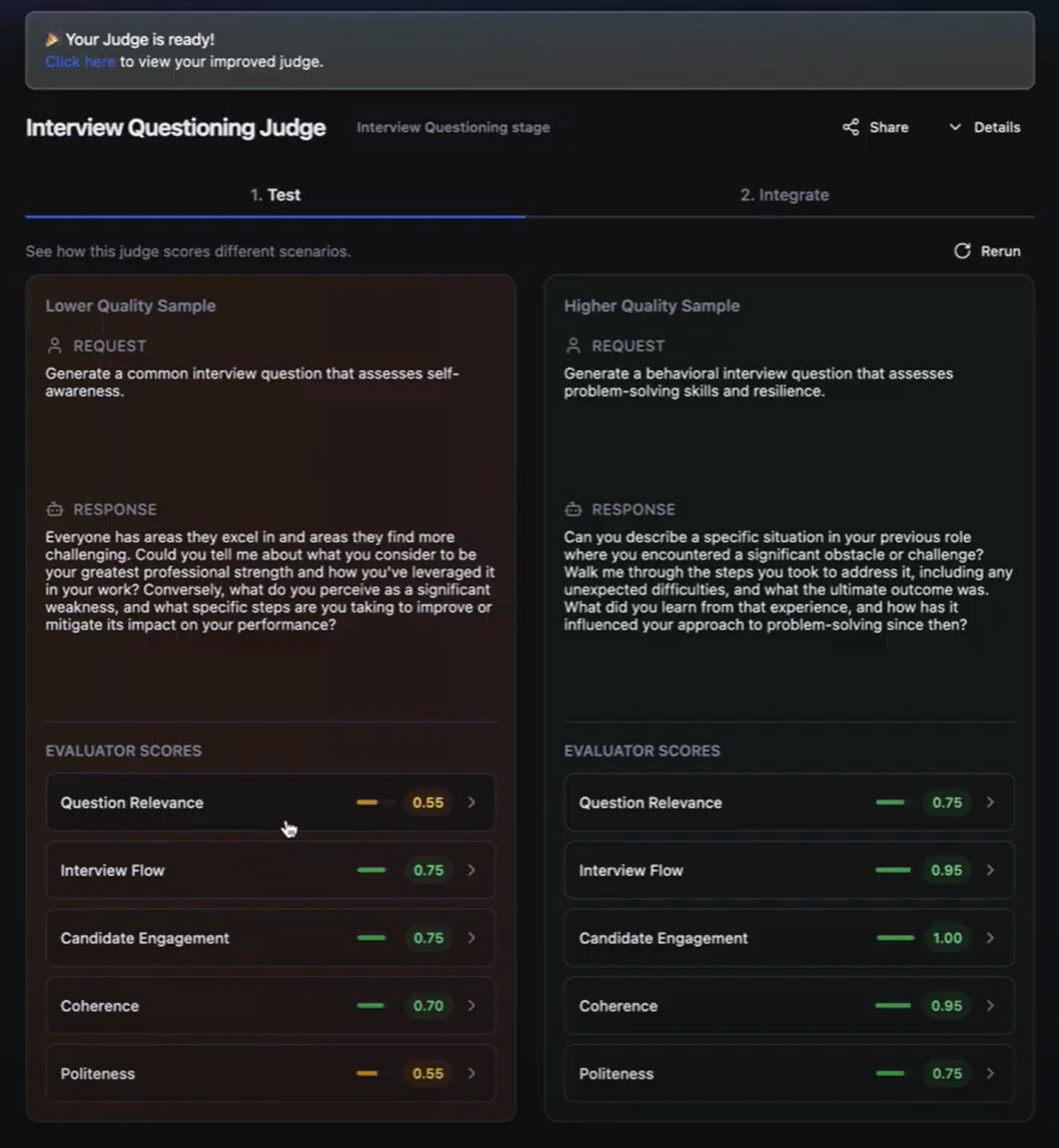

- First draft: An evaluation is generated with two example scenarios and basic metrics.

- Automatic improvement: Scorable creates a better version in the background, with more realistic scenarios and a more complete evaluation stack.

- Fine-tuning: You can add job descriptions, interview styles, and policy documents to align the evaluation more closely with your needs.

This process ensures that your AI is not just tested, but tested against the right criteria.

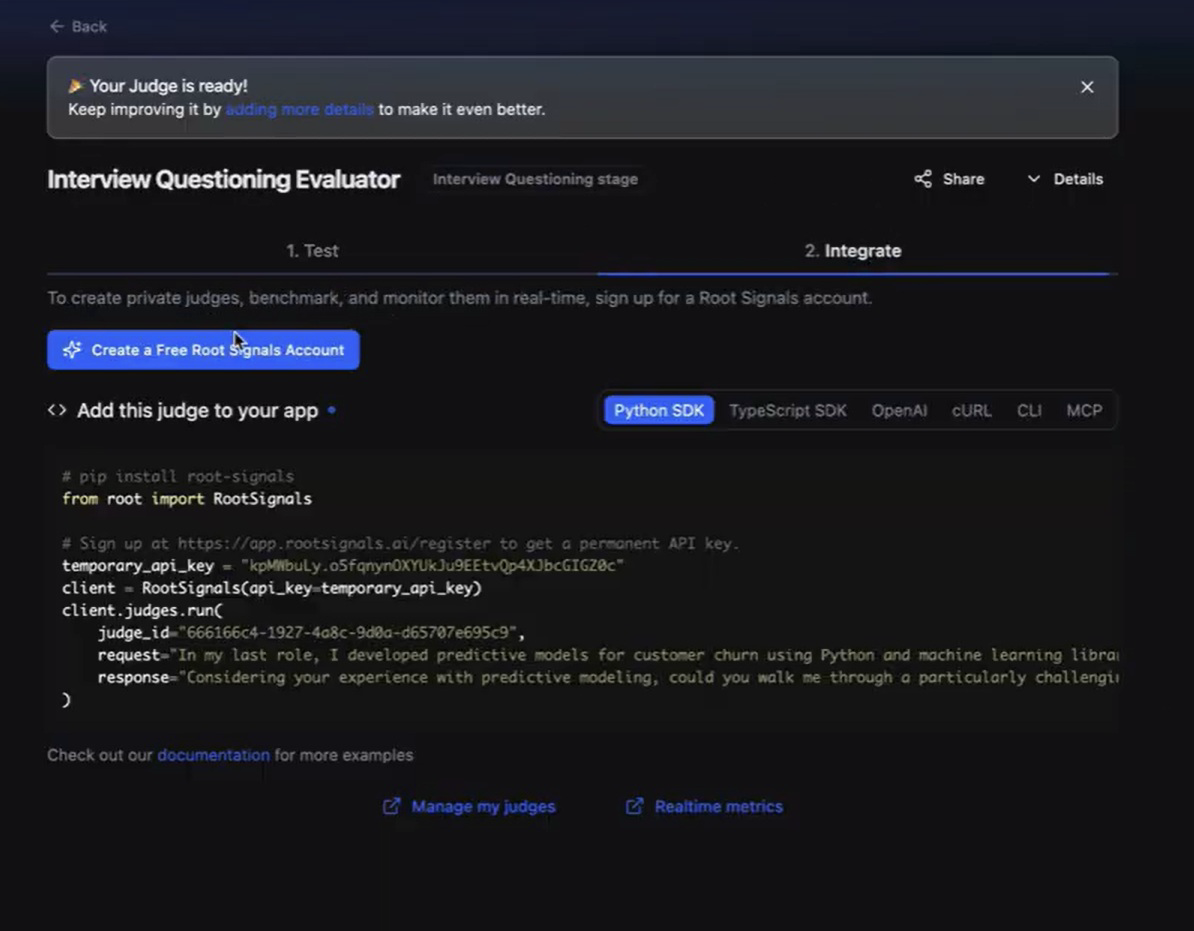

Easy Integration with Scorable

Once your evaluation stack is ready, integration is simple. With just a few lines of code, Scorable connects to your application and automatically starts evaluating its outputs. The code snippet is provided on the same page, and you can pick the variant that best fits your stack, such as Python or OpenAI.

This creates a continuous feedback loop, giving you insights into:

- Where your AI performs well

- Where it needs improvement

- How it evolves over time
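The feedback loop described above can be sketched as a wrapper around your application's model call. This is a hypothetical illustration, not the snippet Scorable generates: `evaluate_output` is a stub standing in for a call to an evaluation service, its two metrics use toy heuristics, and `fake_llm` stands in for your real model. Every call is scored, and the per-metric history shows where the app performs well, where it needs improvement, and how it changes over time.

```python
import functools
from collections import defaultdict
from statistics import mean
from typing import Callable, Dict, List

# Per-metric score history; each evaluated call appends one score per metric.
history: Dict[str, List[float]] = defaultdict(list)


def evaluate_output(prompt: str, output: str) -> Dict[str, float]:
    # Hypothetical stub: a real setup would send prompt and output to an
    # evaluation service and receive per-metric scores back.
    words = output.split()
    return {
        "usefulness": min(len(words) / 20, 1.0),
        "conciseness": 1.0 if len(words) <= 50 else 0.0,
    }


def evaluated(fn: Callable[[str], str]) -> Callable[[str], str]:
    """Decorator: return the model's output unchanged, but score it first."""
    @functools.wraps(fn)
    def wrapper(prompt: str) -> str:
        output = fn(prompt)
        for metric, score in evaluate_output(prompt, output).items():
            history[metric].append(score)
        return output
    return wrapper


@evaluated
def fake_llm(prompt: str) -> str:
    # Stand-in for your application's real model call.
    return "Practice answering with concrete examples from past roles."


def report(threshold: float = 0.7) -> Dict[str, str]:
    """Label each metric by its average score across all evaluated calls."""
    return {
        metric: "performs well" if mean(scores) >= threshold else "needs improvement"
        for metric, scores in history.items()
    }


for p in ["How do I answer salary questions?", "Give me feedback on my intro."]:
    fake_llm(p)

print(report())
# {'usefulness': 'needs improvement', 'conciseness': 'performs well'}
```

Wrapping the call with a decorator leaves the application's own code path unchanged: users still get the model's output, and evaluation happens alongside it.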

Why Does AI Auditing Matter?

Trust is the foundation of AI adoption. By personalizing evaluation with Scorable, you can:

- Build trust in your AI-powered products

- Catch weaknesses before users do

- Ensure your applications meet both technical and business goals

In short, Scorable helps you move beyond trial and error and toward production-ready, reliable AI.

If you're developing with AI, don't just trust your model: measure it with Scorable and catch issues before they reach your users. Get Scorable and start catching hallucinations in your AI applications.